The Problem

Gun violence is chronically underreported in real time, especially when witnesses fear retaliation or assume someone else will call 911. That gap matters because the first minutes after a shooting shape whether victims get rapid trauma care, whether police find shell casings and witnesses, and whether investigators can connect incidents to the same firearm through ballistics databases.

Acoustic gunshot detection (AGD) systems are networks of microphones that detect impulsive sounds, triangulate where they came from, and send alerts. They promise to close that reporting gap by notifying police even when nobody calls. Cities have spent millions deploying these systems, yet many communities still debate whether they reduce harm, increase unnecessary police contact, or simply generate more calls for service without measurably better outcomes.
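The “triangulate” step is commonly described as time-difference-of-arrival (TDOA) localization: several sensors timestamp the same impulse, and the differences between those timestamps constrain where the sound originated. The sketch below is a minimal textbook grid search, not any vendor’s algorithm. The sensor layout, the fixed 343 m/s speed of sound, and the function names `arrival_times` and `locate_tdoa` are illustrative assumptions.

```python
import math

# Assumed constant speed of sound in air (m/s), roughly correct near 20 °C.
SPEED_OF_SOUND = 343.0


def arrival_times(source, sensors, t0=0.0):
    """Simulate when an impulse emitted at `source` at time t0 reaches each sensor."""
    return [t0 + math.dist(source, s) / SPEED_OF_SOUND for s in sensors]


def locate_tdoa(sensors, times, extent=500.0, step=5.0):
    """Grid-search the point whose predicted arrival-time differences best match the observed ones.

    All differences are taken relative to sensor 0, so the unknown emission time cancels out.
    The search covers a square of half-width `extent` metres centred on the origin.
    """
    n = int(extent / step)
    best, best_err = None, math.inf
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            p = (i * step, j * step)
            d0 = math.dist(p, sensors[0])
            err = 0.0
            for s, t in zip(sensors[1:], times[1:]):
                predicted = (math.dist(p, s) - d0) / SPEED_OF_SOUND
                observed = t - times[0]
                err += (predicted - observed) ** 2
            if err < best_err:
                best, best_err = p, err
    return best


if __name__ == "__main__":
    sensors = [(-300.0, -300.0), (300.0, -300.0), (300.0, 300.0), (-300.0, 300.0)]
    true_source = (120.0, -45.0)
    # The locator only sees arrival times; the emission time (12.5 s) is unknown to it.
    times = arrival_times(true_source, sensors, t0=12.5)
    print("estimated source:", locate_tdoa(sensors, times))
```

A grid search is the simplest solver to check by eye. A fielded system would more plausibly use an iterative least-squares solver and would also have to cope with echoes, buildings blocking the direct path, and temperature-dependent sound speed, none of which this sketch models.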

Traditional approaches fall short because 911 reporting is inconsistent and often delayed, and officers arriving later may find little physical evidence left at the scene. Even when departments do respond, the “shots fired” call may be vague (“somewhere near the park”) rather than a precise address, which wastes time and can increase risky vehicle responses.

What Research Shows

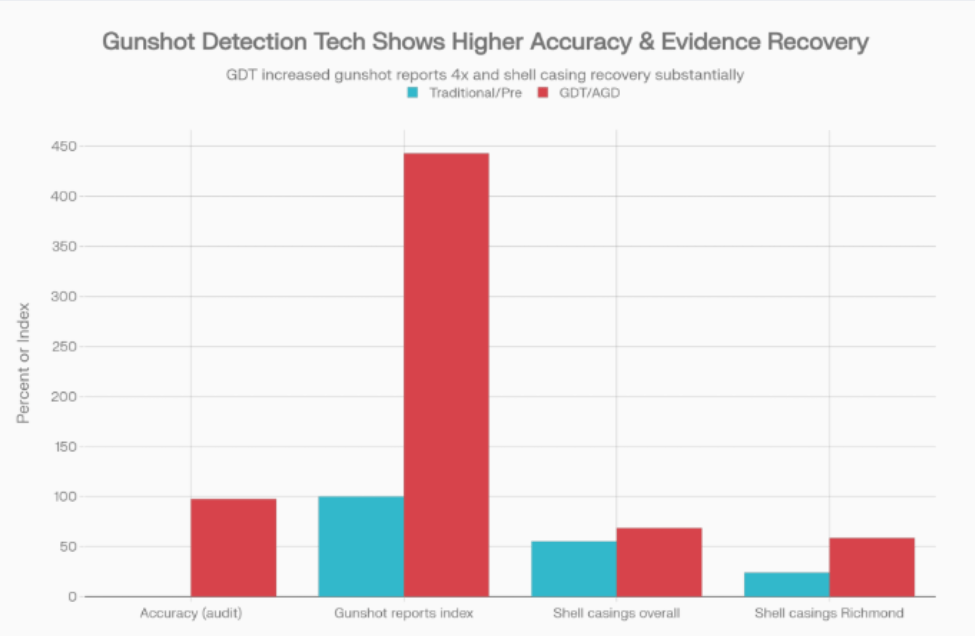

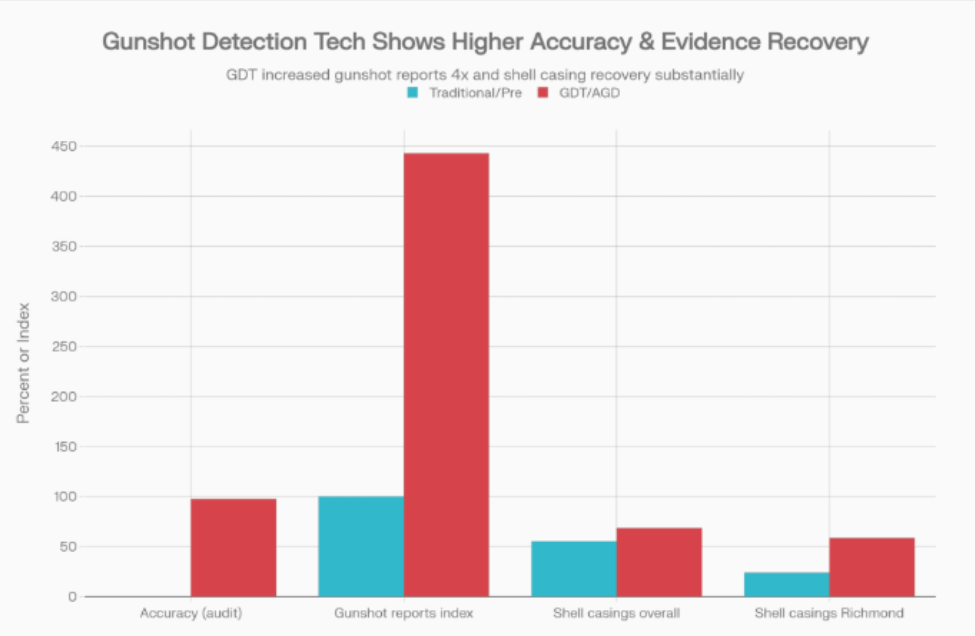

Acoustic gunshot detection (GDT/AGD) performance vs traditional reporting and pre-deployment baselines (audit accuracy, incident awareness proxy, and evidence recovery)

On retrospective measures, vendors and some audits report high classification accuracy for detecting and publishing gunfire alerts. An independent audit commissioned by ShotSpotter reported overall accuracy of 97.69% for 2019–2021 and 97.63% for 2022, with a 2022 “false positive” rate of 0.36%, where a false positive means a published gunfire alert that the client later reported was not gunfire. Those figures suggest the core detection stack performs well, but they are measured against the vendor’s own definitions and depend on clients reporting errors back.

Retrospective evaluations also show that AGD can expand incident awareness far beyond citizen calls. A cost-benefit analysis summary cited by the vendor reports that in Winston‑Salem, calls for service reporting gunshots averaged 7 per month before the system and 31 per month after implementation, about a 4.4× increase in “known” gunfire incidents entering dispatch workflows. That lift is the core informational value of AGD: it changes what the system “knows,” not just how fast it reacts.
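The 4.4× figure is a ratio of monthly averages. The sketch below reproduces it from the two numbers quoted above and converts it to a percentage change; the `lift` helper is a hypothetical name. Note that the ratio measures how many more gunshot reports enter dispatch, not how many of them were confirmed shootings.

```python
def lift(before: float, after: float) -> tuple[float, float]:
    """Return (ratio, percent change) between a before and an after monthly average."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    ratio = after / before
    return ratio, (ratio - 1.0) * 100.0


# Winston-Salem gunshot calls for service per month, before vs after AGD (figures quoted above).
ratio, pct = lift(7, 31)
print(f"{ratio:.2f}x ({pct:+.0f}%)")  # prints "4.43x (+343%)"
```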

Evidence collection is another measurable benefit. An Urban Institute evaluation of gunshot detection technology (GDT) reported that the share of cases with shell casings found at the scene rose from 55.3% before implementation to 68.5% after implementation across sites, with Richmond showing a larger jump from 24.1% to 58.6%. Because casings are critical for firearm linkage through NIBIN (the National Integrated Ballistic Information Network), even modest increases can strengthen investigations.
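Recovery rates are easy to misread because a change in percentage points differs from a relative change. A small helper, hypothetically named `rate_change`, makes the distinction explicit using only the figures quoted above.

```python
def rate_change(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent) between two rates."""
    points = after_pct - before_pct
    relative = points / before_pct * 100.0
    return points, relative


# Share of cases with shell casings recovered, before vs after implementation (Urban Institute).
for site, before, after in [("Across sites", 55.3, 68.5), ("Richmond", 24.1, 58.6)]:
    points, relative = rate_change(before, after)
    print(f"{site}: {points:+.1f} points, {relative:+.0f}% relative")
```

The overall gain is about 13 points, roughly a quarter more cases with casings, while Richmond’s 34.5-point jump means casings were recovered in more than twice the share of cases.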

Visualization 1 (comparison): A grouped bar chart contrasts AGD/GDT vs traditional or pre-deployment baselines across audit accuracy, an incident-awareness proxy (gunshot reports index), and shell-casing recovery overall and in Richmond.

What the Real World Shows

Operational impact looks most promising for response time; outcomes such as survival and violence reduction are less consistent. A 2024 trauma-system study (“Detect, Dispatch, Drive”) reported that as ShotSpotter activation became common in a city’s firearm-injury responses, police response time improved from 4 minutes to 2 minutes over 2016–2021 (P = 0.001). EMS response time showed smaller or non-significant changes, depending on the subgroup compared. A 2025 narrative review in Military Medicine also cites civilian studies in which AGD activation correlated with faster police and EMS responses (for example, police 3.7 ± 3.5 minutes vs 5.4 ± 5.7 minutes, and EMS 4.5 ± 2.0 minutes vs slower times in the comparison group), but it emphasizes that evidence for improved survival remains unclear.
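The response-time figures can be put on a common footing: minutes saved, percentage reduction, and, for the review’s mean ± SD example, a standardized difference. The Cohen’s d calculation below assumes equal group sizes, which the quoted figures do not state, so treat it as a rough scale check rather than a re-analysis. The helper names are hypothetical.

```python
import math


def minutes_saved(baseline: float, with_agd: float) -> tuple[float, float]:
    """Return (minutes saved, percent reduction) for a response interval."""
    saved = baseline - with_agd
    return saved, saved / baseline * 100.0


def cohens_d(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Standardized mean difference (b minus a) using a pooled SD.

    Assumes equal group sizes; with unequal sizes the pooled SD would be weighted.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2.0)
    return (mean_b - mean_a) / pooled_sd


for label, baseline, with_agd in [
    ("Police, 2016 vs 2021 (Detect, Dispatch, Drive)", 4.0, 2.0),
    ("Police, comparison vs AGD (2025 review example)", 5.4, 3.7),
]:
    saved, pct = minutes_saved(baseline, with_agd)
    print(f"{label}: {saved:.1f} min faster ({pct:.0f}% shorter)")

# Review example: AGD 3.7 ± 3.5 min vs comparison 5.4 ± 5.7 min.
print(f"Cohen's d ≈ {cohens_d(3.7, 3.5, 5.4, 5.7):.2f}")
```

A d of about 0.36 is conventionally read as small to moderate, and standard deviations nearly as large as the means point to widely spread, likely skewed response times. Both fit the review’s caution that faster averages have not reliably translated into survival.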

Controlled field evidence on public-safety outcomes is mixed. A Philadelphia evaluation of an acoustic gunshot detection system (SENTRI, not ShotSpotter) used a partially randomized, block-matched design. It found that gunshot-related incidents increased by about 259% after activation but that confirmed shootings did not increase significantly: workload rose without a measurable rise in verified gun-crime detections during the study period. That pattern of more alerts with uncertain downstream benefit recurs throughout debates about whether detection translates into deterrence, clearance, or reduced harm.
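A 259% increase means the post-activation count was about 3.59 times the baseline, not 2.59 times. The sketch below uses invented baseline counts, labelled as such, to show why a flat number of confirmed shootings against a surging incident count dilutes the share of alerts that lead anywhere. `after_increase` is a hypothetical helper.

```python
def after_increase(baseline: float, pct_increase: float) -> float:
    """Count after a stated percentage increase (e.g. +259% -> 3.59x the baseline)."""
    return baseline * (1.0 + pct_increase / 100.0)


# HYPOTHETICAL counts for illustration only; the study's raw counts are not used here.
baseline_incidents = 100
confirmed_shootings = 20  # held flat, mirroring "no significant increase in confirmed shootings"

post_incidents = after_increase(baseline_incidents, 259)
print(f"gunshot-related incidents: {baseline_incidents} -> {post_incidents:.0f}")
print(
    "confirmed share of incidents: "
    f"{confirmed_shootings / baseline_incidents:.1%} -> {confirmed_shootings / post_incidents:.1%}"
)
```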

Among evidence syntheses, the 2025 narrative review concludes that AGD may shorten critical prehospital intervals but that translation into better clinical outcomes is inconsistent; it calls for more outcome-focused prospective research and tighter integration into medical command-and-control workflows. Policy-facing reviews also highlight that “accuracy” can be reported in ways that exclude unverified events, creating a mismatch between technical metrics and what communities experience on the street.

Visualization 2 (outcomes): An outcomes chart can plot (a) the police response-time improvement from 4 to 2 minutes over 2016–2021 and (b) example interval differences cited in the 2025 review (3.7 vs 5.4 minutes for police), alongside (c) a “no consistent mortality benefit” indicator (shown as inconclusive rather than as a single number).

The Implementation Gap

The biggest gap is that high detection accuracy does not guarantee high operational value. In practice, agencies must convert an alert into (1) a safe response, (2) evidence found, (3) a solvable case, and ideally (4) fewer future shootings, and each step introduces friction. The Philadelphia study illustrates this: alerts surged but confirmed shootings did not, which can consume officer time and create alert fatigue.
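Because each conversion multiplies the one before it, moderate losses at every step compound into a small end-to-end yield. The sketch tracks step and cumulative conversion from alerts through the first three steps listed above. The counts are invented for illustration, and the `Stage` class and `funnel_report` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    count: int


def funnel_report(stages):
    """Yield (name, step conversion, cumulative conversion) for each stage after the first."""
    top = stages[0].count
    for prev, cur in zip(stages, stages[1:]):
        yield cur.name, cur.count / prev.count, cur.count / top


# HYPOTHETICAL counts for illustration only; not drawn from any evaluation cited here.
stages = [
    Stage("alerts", 1000),
    Stage("safe response on scene", 900),
    Stage("evidence found", 300),
    Stage("solvable case", 60),
]

for name, step, cumulative in funnel_report(stages):
    print(f"{name:<24} step {step:6.1%}  cumulative {cumulative:6.1%}")
```

With these made-up numbers, step rates of 90%, 33%, and 20% leave only 6% of alerts ending in a solvable case, which is why a single accuracy figure at the top of the funnel says little about the bottom.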

Second, false positives are not the only problem; unverifiable alerts are another. Reviews note that many activations cannot be conclusively verified by police on arrival, and some critiques argue that vendors may exclude these from false-positive counts, making accuracy look better on paper than it feels on patrol. When officers repeatedly find nothing, they may start downgrading their responses, which undermines the very time savings the system promised.
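One way to keep unverified alerts visible is to report a range instead of a single accuracy figure. The sketch below, with invented counts and a hypothetical `gunfire_share_bounds` helper, bounds the share of published alerts that were real gunfire. The upper bound treats every unverified alert as correct (roughly what a metric built only on client-reported errors implies); the lower bound treats every unverified alert as not gunfire.

```python
def gunfire_share_bounds(published: int, reported_false: int, unverified: int) -> tuple[float, float]:
    """Return (lower, upper) bounds on the share of published alerts that were real gunfire.

    upper: every unverified alert counted as real (no complaint is treated as correct).
    lower: every unverified alert counted as not gunfire.
    """
    if published <= 0 or reported_false + unverified > published:
        raise ValueError("counts are inconsistent")
    upper = (published - reported_false) / published
    lower = (published - reported_false - unverified) / published
    return lower, upper


# HYPOTHETICAL counts for illustration; not taken from the audit or any city's data.
low, high = gunfire_share_bounds(published=1000, reported_false=4, unverified=400)
print(f"share of alerts that were gunfire: {low:.1%} to {high:.1%}")
```

The width of that interval, not either endpoint, is what an independent metric that includes unverified activations would expose.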

Third, AGD changes policing volume and patterns, which creates political and trust barriers even when the technology works technically. Critics argue that automated alerts can intensify patrol presence in specific neighborhoods, increasing stops and community tension even if those alerts don’t reliably lead to arrests or reduced violence. Several cities have paused or ended contracts amid concerns about effectiveness, transparency, and civil liberties, reinforcing a “pilot forever” pattern in which deployments cycle through trials, pauses, and cancellations without becoming settled practice.

Finally, the costs are real and competing priorities are brutal. When budgets are tight, leaders ask why they should pay for more alerts if they can’t staff the response, expand forensic processing, or improve witness protection—investments that often drive clearance and prevention more directly.

Visualization 3 (implementation gap): An implementation-gap chart can show a funnel from “high measured detection accuracy” to “verified incidents,” to “evidence recovered,” to “clearance/safety outcomes,” with drop-offs illustrated using the Philadelphia result (large increase in incidents, no significant increase in confirmed shootings) and the review’s conclusion of inconsistent survival impact.

Where It Actually Works

AGD tends to work best when cities treat it as part of an end-to-end violence response system rather than as a standalone sensor network. The shell-casing recovery gains reported in the Urban Institute evaluation suggest value when agencies use alerts to arrive quickly, secure scenes, and systematically search for ballistic evidence. Medical impact looks most plausible when AGD is paired with operational protocols that speed both police scene security and EMS access, rather than only increasing dispatch volume.

The Opportunity

The opportunity is to make AGD “boring infrastructure”: tightly evaluated, transparently governed, and integrated with investigative and medical workflows so extra alerts convert into real harm reduction.

- Publish standardized, independent performance metrics that include “unverified” activations, not just vendor-defined false positives.

- Integrate AGD alerts with evidence workflows (scene management, casing search protocols, NIBIN submissions) to sustain the evidence gains seen in evaluations.

- Pair AGD with trauma-response protocols and measure patient outcomes directly, since time savings alone have not reliably proven survival benefit.

- Use targeted deployments with clear success criteria (e.g., casing recovery, clearance improvements, response-time thresholds) and sunset clauses when criteria aren’t met.

- Build community governance and transparency into deployment decisions to reduce trust collapse that can derail adoption even when technical performance is strong.
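The success-criteria and sunset-clause recommendation can be made concrete as a simple review rule. This is a minimal sketch under assumed thresholds: the metric names, target values, and the all-criteria-must-pass rule are hypothetical choices a city would set in its own contract, not figures from the studies above.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        """True if the observed value satisfies the target in the right direction."""
        return observed >= self.target if self.higher_is_better else observed <= self.target


def review_deployment(criteria, observed):
    """Return (continue?, unmet criterion names). A missing metric counts as unmet."""
    unmet = [c.name for c in criteria if c.name not in observed or not c.met(observed[c.name])]
    return not unmet, unmet


# HYPOTHETICAL thresholds and observations; a real deployment would set these in its contract.
criteria = [
    Criterion("casing_recovery_rate", 0.60),
    Criterion("clearance_rate_change_pts", 2.0),
    Criterion("median_police_response_min", 3.0, higher_is_better=False),
]
observed = {
    "casing_recovery_rate": 0.66,
    "clearance_rate_change_pts": 0.5,
    "median_police_response_min": 2.4,
}

keep, unmet = review_deployment(criteria, observed)
print("continue" if keep else f"sunset review triggered; unmet: {unmet}")
```

Treating a missing metric as unmet is a deliberate choice: it pushes agencies to actually collect the outcome data the criteria depend on.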

References

Edgeworth Economics. “Independent Audit of the ShotSpotter Accuracy, 2019–2022.” Audit report.

Mares (cited in an NIJ grant document). Discussion of gunshot detection technology characteristics.

University of Michigan Science, Technology, and Public Policy Program (STPP). “Acoustic Gunshot Detection Systems.” Policy report, June 2022.

Ratcliffe, Jerry. “Philadelphia Acoustic Gunshot Detection Study.” SENTRI evaluation summary (partially randomized design).

Urban Institute. “Evaluation of Gunshot Detection Technology…” Technical summary, PDF (shell-casing recovery before and after implementation).

“Detect, Dispatch, Drive: A Study of ShotSpotter Acoustic Technology…” PubMed entry (response times, 2016–2021).

Military Medicine (2025). “Impact of Acoustic Gunshot Detection Technology on Prehospital…” Narrative review.

SAGE (2025). “An interdisciplinary examination of a gunshot detection system.”

SoundThinking. “ShotSpotter Public Safety Results.” Web page (Winston‑Salem calls per month; Camden response-time example).

Restore the Fourth. Compilation of public claims and critiques, PDF.
