Factories lose millions of dollars every year to machines that fail “unexpectedly,” even though the data to predict those failures is already streaming off sensors. Modern predictive‑maintenance models can flag impending breakdowns with AUC values above 0.9, yet many manufacturers remain stuck on fixed‑interval or run‑to‑failure maintenance.

The problem

Unplanned downtime is one of the most expensive problems in manufacturing and heavy industry. A Siemens analysis estimated that unplanned downtime costs Fortune Global 500 companies about 11% of their annual revenue, or roughly 1.5 trillion dollars per year. In some high‑tech sectors, such as semiconductor manufacturing, each hour of unexpected downtime can cost more than 1 million dollars in lost output and scrap.

Traditional maintenance strategies—run‑to‑failure and fixed‑interval preventive maintenance—waste money in two directions at once. Run‑to‑failure pushes machines until they break, causing emergency repairs, safety risks, and long outages. Time‑based preventive maintenance tries to avoid that by servicing equipment on a calendar, but often replaces parts that still have plenty of life left or misses failures that occur between scheduled checks. Studies and consulting reports consistently find that companies using traditional approaches incur higher maintenance costs and more downtime than those using sensor‑driven predictive maintenance.

This problem scales globally. The predictive‑maintenance market was valued at roughly 9.8 billion dollars in 2024 and is expected to grow at over 30% annually, largely because unplanned downtime routinely costs large manufacturers more than 100,000 dollars per hour. Surveys of Industry 4.0 adoption describe predictive maintenance as one of the “killer apps” of industrial IoT, yet many organizations remain stuck at pilot projects or limited line‑level experiments.

What research shows

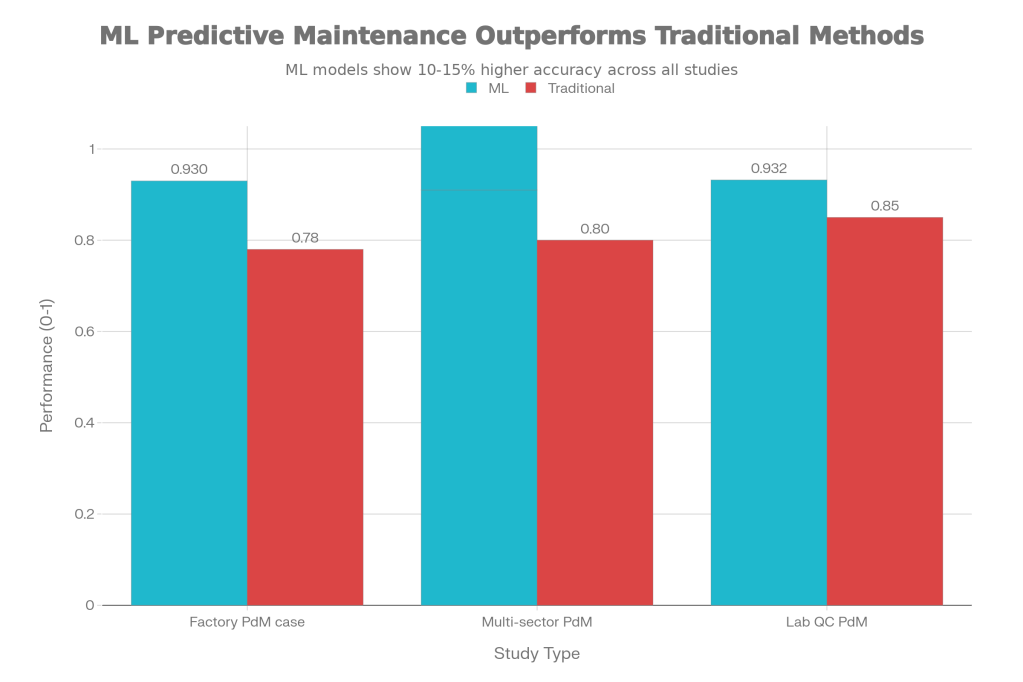

On the modeling side, the story is encouraging. A 2024 survey on predictive maintenance in Industry 4.0 describes a wide range of data‑driven models (random forests, gradient boosting, deep neural networks, and anomaly‑detection methods) that significantly outperform simple thresholds or time‑based rules at predicting failures. For example, a factory case study that used machine‑learning models to classify machine health found that a random‑forest classifier achieved the best performance across accuracy, precision, recall, F1‑score, and AUROC, with each metric at roughly 0.9 or above, beating simpler baselines.

More targeted studies show similar patterns. A multi‑sector predictive‑maintenance framework evaluated on real industrial datasets from automotive, aerospace, and energy equipment reported higher accuracy and F1‑scores for advanced ML models compared with traditional approaches like basic random forests or SVMs tuned on a few hand‑crafted features. In a 2025 NIH‑affiliated study on internal quality‑control data, a random‑forest model predicting out‑of‑control lab events reached 92.0% accuracy, 89.4% recall, and an AUC of 0.932, correctly predicting 68% of future out‑of‑control events within 24 hours—far better than existing rule‑based QC procedures.
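To make the metrics quoted above concrete, here is a minimal sketch of how accuracy, precision, recall, F1, and AUROC are computed from a model's outputs. The labels, scores, and the 0.5 cutoff are hypothetical illustration data, not values from any of the cited studies.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = failure)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # failures caught
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # healthy, no alert
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed failures
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def auroc(y_true, scores):
    """Chance that a random failure case outscores a random healthy case.

    Ties count as half a win (the rank-based definition of AUROC).
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one sample of each class")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical data: 1 = machine failed within the horizon, 0 = stayed healthy.
y_true = [0, 0, 0, 0, 1, 1, 0, 1, 0, 1]
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.80, 0.15, 0.90]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # 0.5 is an arbitrary cutoff

metrics = classification_metrics(y_true, y_pred)
auc = auroc(y_true, scores)
print(metrics, round(auc, 3))
```

On this toy data the classifier catches three of four failures with one false alarm, giving accuracy 0.8 and precision, recall, and F1 of 0.75, while AUROC is about 0.917. AUROC can exceed the thresholded metrics because it scores the ranking of cases and does not depend on a single cutoff.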

These performance gains translate into more precise intervention windows. Survey and planning papers emphasize that robust PdM models allow maintenance teams to exploit the “P–F interval”—the time between detecting a degradation pattern and actual failure—to schedule repairs during planned downtime and avoid catastrophic breakdowns. Systematic reviews of predictive‑maintenance approaches conclude that data‑driven models, when fed continuous sensor data, can detect anomalies earlier and with fewer false alarms than manual inspection and simple statistical control charts.
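As a rough illustration of exploiting the P–F interval, the sketch below picks a planned downtime window that falls between the detection time and the predicted failure time. The function name, the 12-hour safety margin, and the dates are assumptions made for the example, not part of any cited planning model.

```python
from datetime import datetime, timedelta


def pick_repair_window(detected_at, predicted_failure_at, planned_windows,
                       safety_margin=timedelta(hours=12)):
    """Return the earliest planned window inside the P-F interval, or None.

    planned_windows: list of (start, end) datetimes for planned downtime.
    safety_margin:   buffer kept before the predicted failure (an assumption).
    """
    deadline = predicted_failure_at - safety_margin
    candidates = [
        (start, end) for start, end in planned_windows
        if start >= detected_at and end <= deadline
    ]
    # Tuples compare by start time first, so min() yields the earliest window.
    return min(candidates, default=None)


# Hypothetical scenario: degradation detected, failure predicted 10 days out.
detected = datetime(2025, 3, 3, 8, 0)
predicted_failure = detected + timedelta(days=10)
windows = [
    (datetime(2025, 3, 1, 22, 0), datetime(2025, 3, 2, 4, 0)),    # already past
    (datetime(2025, 3, 8, 22, 0), datetime(2025, 3, 9, 4, 0)),    # fits
    (datetime(2025, 3, 15, 22, 0), datetime(2025, 3, 16, 4, 0)),  # too late
]

window = pick_repair_window(detected, predicted_failure, windows)
print(window)
```

Choosing the earliest feasible window is the conservative option. A planner who wants to use more of the component's remaining life could pick the latest feasible window instead, at the price of less slack if the failure estimate is off.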

Chart 1 – ML vs traditional performance

A grouped bar chart compares three contexts: a factory PdM case, a multi‑sector PdM framework, and a lab quality‑control system. In each, ML models (mostly random‑forest or ensemble methods) achieve performance metrics around 0.91–0.93 versus 0.78–0.85 for traditional baselines on a 0–1 scale.

Predictive maintenance machine-learning models consistently outperform traditional maintenance baselines on failure-prediction performance across multiple studies

What the real world shows

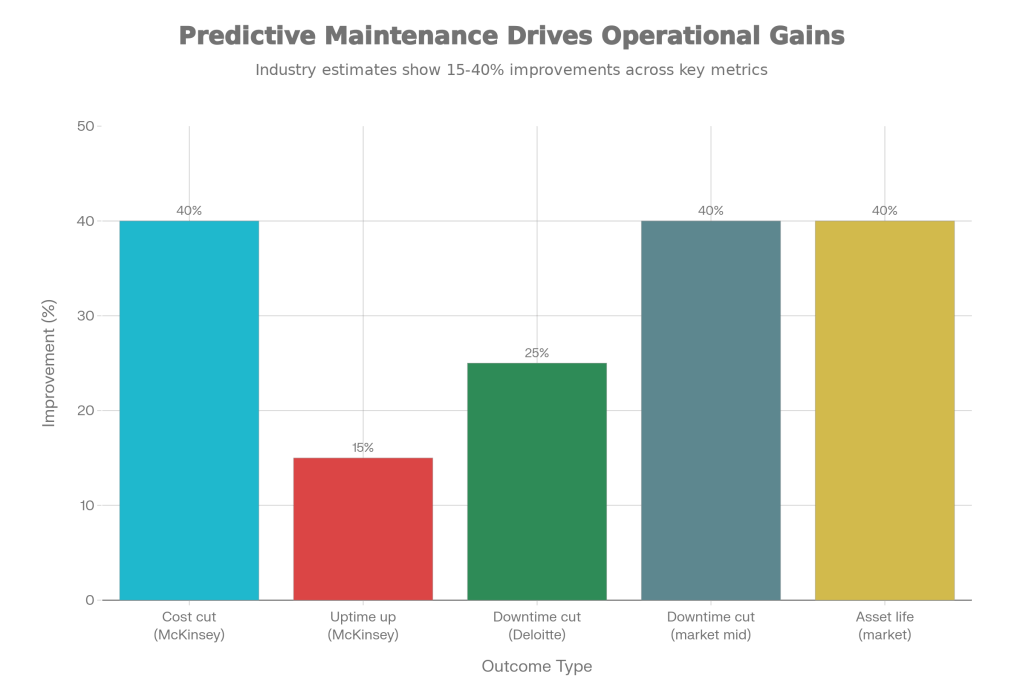

Outside the lab, predictive maintenance can alter cost and uptime in tangible ways. A McKinsey analysis found that well‑implemented predictive‑maintenance programs can reduce maintenance costs by up to 40% and increase equipment uptime by 10–20%. Market studies synthesizing deployments from companies such as Siemens and IBM report that PdM can cut unplanned downtime by 30–50% and extend asset life by as much as 40%.

Other real‑world data echo these benefits. A Deloitte‑cited study noted that companies adopting sensor‑driven predictive maintenance reduced unplanned downtime by up to 25%, largely by eliminating time‑consuming manual inspections and catching anomalies earlier. Practitioners also report 20–50% reductions in maintenance‑planning time and 5–10% reductions in spare‑parts inventory costs when predictive insights are integrated into planning and inventory systems.

A 2024 survey of IoT‑enabled PdM planning models emphasizes that sensor‑based predictive maintenance improves short‑term decision‑making: algorithms trigger early recommendations about repairs, and planners can choose actions that minimize the impact of predicted failures. Systematic literature reviews of PdM in Industry 4.0 describe numerous case studies where integrating condition‑monitoring data with analytics decreased mean time to repair (MTTR), increased mean time between failures (MTBF), and improved overall equipment effectiveness (OEE) compared with traditional maintenance.
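For readers less familiar with these KPIs, the following sketch computes MTBF, MTTR, and OEE from a simple failure log using their standard definitions. The 720-hour period, the repair durations, and the fixed performance and quality ratios are hypothetical inputs chosen only to show the arithmetic.

```python
def reliability_kpis(planned_hours, repair_hours):
    """MTBF, MTTR, and availability for one asset.

    planned_hours: planned production time in the observation period.
    repair_hours:  one downtime duration (hours) per failure in that period.
    """
    failures = len(repair_hours)
    downtime = sum(repair_hours)
    uptime = planned_hours - downtime
    mtbf = uptime / failures if failures else float("inf")
    mttr = downtime / failures if failures else 0.0
    return {"mtbf": mtbf, "mttr": mttr,
            "availability": uptime / planned_hours}


def oee(availability, performance, quality):
    """OEE is the product of three ratios, each between 0 and 1."""
    return availability * performance * quality


# Hypothetical 720-hour month for one compressor, before and after PdM.
before = reliability_kpis(720, [10, 14, 12])  # three failures, 36 h down
after = reliability_kpis(720, [6, 6])         # two failures, 12 h down

# Performance (0.90) and quality (0.98) are held fixed as assumptions.
oee_before = oee(before["availability"], 0.90, 0.98)
oee_after = oee(after["availability"], 0.90, 0.98)

print(before, round(oee_before, 4))
print(after, round(oee_after, 4))
```

In this made-up example MTBF rises from 228 to 354 hours, MTTR falls from 12 to 6 hours, and OEE moves from about 0.838 to about 0.867 through the availability term alone.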

Chart 2 – Outcome improvements from PdM

A bar chart shows several reported outcomes: up to 40% maintenance‑cost reduction and roughly 15% uptime increase (McKinsey reports 10–20%), up to 25% unplanned‑downtime reduction in sensor‑driven deployments (the Deloitte‑cited study), and about 40% downtime reduction (reported range 30–50%) plus up to 40% asset‑life extension in mature PdM programs (market studies of Siemens and IBM deployments).

Reported outcome improvements from real-world predictive maintenance programs in manufacturing and industrial settings

The implementation gap

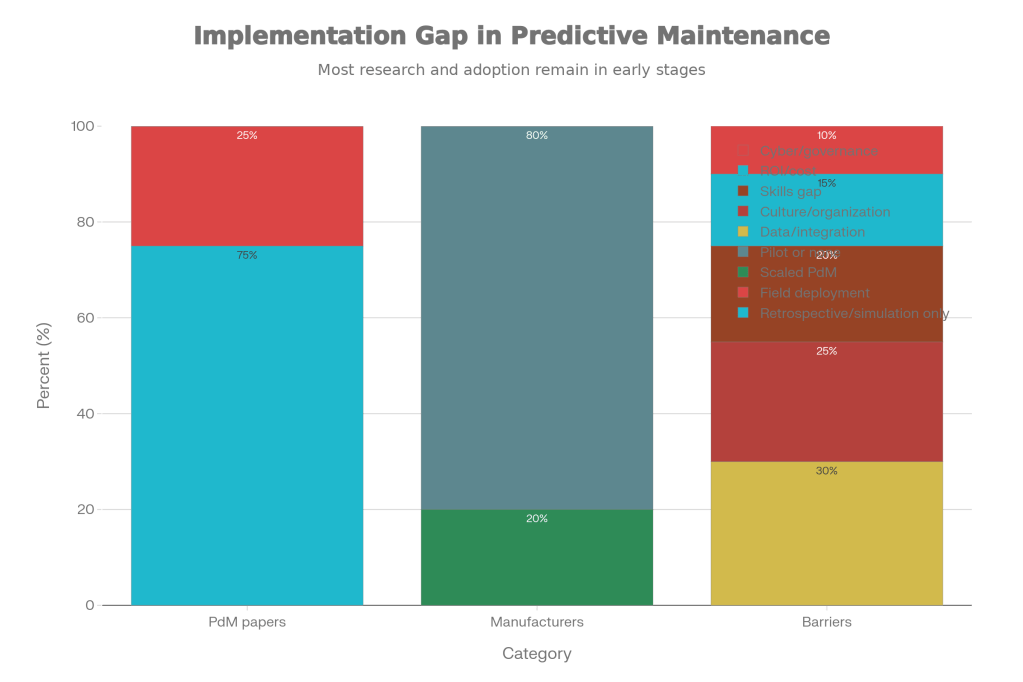

Despite strong technical performance and compelling ROI, most predictive‑maintenance efforts never scale beyond pilots. Surveys and systematic reviews note that a large share of PdM papers focus on algorithm development and simulation on historical datasets, with limited real industrial validation or long‑term deployment. Many projects stop at a proof‑of‑concept model running in a data‑science sandbox, disconnected from maintenance work orders or production planning.

Data and integration issues are the first big barrier. Many plants run heterogeneous equipment from multiple vendors with different sensor capabilities, data formats, and control systems; merging this into a clean, continuous historical dataset is costly and time‑consuming. Systematic reviews stress that data quality, missing values, and lack of standardized condition indicators often limit model performance and trust, especially when sensor coverage is sparse or unreliable. Even when good models exist, they frequently sit outside the computerized maintenance‑management system (CMMS) or ERP, so insights do not automatically trigger work orders, inventory checks, or schedule changes.

Organizational and cultural resistance is just as important. Maintenance teams are used to calendar‑based routines and may be skeptical of an algorithm that tells them to “skip” a scheduled intervention or shut down a machine that appears fine. Reviews highlight that many PdM initiatives fail because reliability engineers, operators, and data scientists do not share a common mental model of risk and evidence, leading to low adoption even when models are accurate. Without clear ownership, training, and incentives tied to reliability outcomes, predictive alerts may be ignored.

Economics and governance also slow things down. Installing sensors, connectivity, and edge or cloud analytics can require large upfront investments, and many firms struggle to quantify the ROI beyond generic statements about reduced downtime. Cybersecurity and data‑governance concerns make some organizations wary of streaming machine data to external clouds or vendor platforms. Surveys of PdM implementations in Industry 4.0 consistently list data integration, skills gaps, unclear ROI, and cybersecurity as leading barriers.
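One way to move past generic ROI statements is a simple payback calculation. The sketch below is only that: every input is a hypothetical placeholder a plant would replace with its own figures, and the reduction rates are deliberately set at or below the ranges quoted earlier in this article.

```python
def simple_payback(upfront_cost, annual_running_cost,
                   baseline_downtime_hours, downtime_cost_per_hour,
                   downtime_reduction,
                   baseline_maintenance_cost, maintenance_reduction):
    """Return (net annual benefit, payback period in years).

    Reductions are fractions, e.g. 0.30 means 30% less downtime.
    Ignores discounting, ramp-up time, and taxes for simplicity.
    """
    downtime_savings = (baseline_downtime_hours * downtime_cost_per_hour
                        * downtime_reduction)
    maintenance_savings = baseline_maintenance_cost * maintenance_reduction
    net_benefit = downtime_savings + maintenance_savings - annual_running_cost
    payback_years = (upfront_cost / net_benefit
                     if net_benefit > 0 else float("inf"))
    return net_benefit, payback_years


# All figures below are hypothetical assumptions for one plant.
net_benefit, payback_years = simple_payback(
    upfront_cost=1_500_000,           # sensors, connectivity, analytics
    annual_running_cost=200_000,      # licences, cloud, support
    baseline_downtime_hours=100,      # unplanned hours per year today
    downtime_cost_per_hour=100_000,   # per-hour cost for a large manufacturer
    downtime_reduction=0.30,          # low end of the 30-50% range
    baseline_maintenance_cost=2_000_000,
    maintenance_reduction=0.20,       # well under the "up to 40%" claim
)
print(round(net_benefit), round(payback_years, 2))
```

With these placeholder inputs the net benefit is about 3.2 million dollars per year and the payback period is a little under six months. The result is very sensitive to the downtime-cost assumption, which is why plant-specific figures matter more than industry averages.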

Chart 3 – The research–practice gap

A stacked bar chart illustrates three points:

- Roughly three‑quarters of PdM papers focus on retrospective or simulation‑only analyses, with perhaps one‑quarter describing any field deployment.

- Only an estimated 20% of large manufacturers have scaled PdM across multiple plants, with about 80% still at pilot or non‑adoption.

- Surveys attribute about 30% of barriers to data/integration, 25% to culture/organization, 20% to skills gaps, 15% to ROI/cost, and 10% to cybersecurity/governance.

Most predictive maintenance research and pilots never reach full-scale deployment, with data, organizational, and economic barriers dominating the gap

Where it actually works

Organizations that have made predictive maintenance routine tend to share some traits. Case material from Siemens and IBM deployments indicates that successful programs tightly integrate PdM models with existing CMMS and ERP systems so that predicted failures automatically generate and prioritize work orders. These companies often centralize reliability engineering, invest in high‑quality sensor retrofits on critical assets, and standardize data pipelines across plants.
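The integration pattern described above can be sketched as a small translation step between model output and the maintenance system. Everything here is an assumption for illustration: the `Prediction` and `WorkOrder` types, the 0.6 alert threshold, the 1 to 5 criticality scale, and the risk cutoff of 3.0 are hypothetical, and a real deployment would push the resulting orders through its own CMMS or ERP interface.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    asset_id: str
    failure_probability: float  # model output in [0, 1]
    criticality: int            # 1 (low) to 5 (line-stopping), set by engineers


@dataclass
class WorkOrder:
    asset_id: str
    priority: str
    risk_score: float


def to_work_orders(predictions, alert_threshold=0.6, urgent_risk=3.0):
    """Turn model predictions into work orders sorted by risk, highest first."""
    orders = []
    for p in predictions:
        if p.failure_probability < alert_threshold:
            continue  # below the alert threshold: no work order
        risk = p.failure_probability * p.criticality
        priority = "urgent" if risk >= urgent_risk else "planned"
        orders.append(WorkOrder(p.asset_id, priority, risk))
    return sorted(orders, key=lambda o: o.risk_score, reverse=True)


# Hypothetical predictions for three assets.
predictions = [
    Prediction("fan-02", 0.70, 2),
    Prediction("pump-07", 0.85, 5),
    Prediction("conveyor-11", 0.30, 4),
]

orders = to_work_orders(predictions)
for order in orders:
    print(order)
```

Weighting probability by asset criticality is one simple prioritization rule among many. Its appeal is that reliability engineers and technicians can inspect and adjust both the scale and the cutoffs without touching the model.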

Consulting and market reports note that leaders treat predictive maintenance as a multi‑year transformation, not a one‑off AI project. They build cross‑functional teams that include maintenance technicians, production managers, IT/OT staff, and data scientists, and they track clear metrics such as unplanned‑downtime hours, MTBF, and maintenance‑labor utilization. When frontline technicians see that model‑driven alerts consistently prevent real breakdowns and reduce emergency call‑outs, trust and adoption follow.

The opportunity

There is a large, still‑underused opportunity to turn high‑performing predictive‑maintenance models into everyday tools that quietly keep factories running. Companies that close the implementation gap can reclaim millions of dollars in lost output and maintenance waste while extending the life of expensive assets.

Practical steps that would improve adoption:

- Start with a focused asset class (e.g., critical pumps or compressors) and track concrete metrics like MTBF and unplanned downtime before and after PdM.

- Invest early in data foundations—standardized sensors, historization, and integration with CMMS/ERP—so predictions flow directly into work orders and planning.

- Involve maintenance technicians and operators in model design, thresholds, and alert workflows to build ownership and trust.

- Build cross‑functional reliability teams combining OT, IT, and data science, with incentives tied to uptime and maintenance‑cost outcomes.

- Address cybersecurity and governance explicitly, with clear policies on data sharing, vendor access, and on‑prem vs cloud analytics, to unlock IT/OT approval.
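As one possible way to involve technicians in setting alert thresholds, the sketch below compares candidate thresholds by the total cost of false alarms and missed failures on historical data. The cost figures, candidate thresholds, and labels are hypothetical and would in practice come from the maintenance team's own records.

```python
def total_cost(y_true, scores, threshold, false_alarm_cost, missed_failure_cost):
    """Cost of alerting at `threshold` on past data (1 = real failure)."""
    cost = 0
    for actual, score in zip(y_true, scores):
        alert = score >= threshold
        if alert and actual == 0:
            cost += false_alarm_cost      # crew dispatched for nothing
        elif not alert and actual == 1:
            cost += missed_failure_cost   # breakdown with no warning
    return cost


def best_threshold(y_true, scores, candidates,
                   false_alarm_cost, missed_failure_cost):
    """Candidate threshold with the lowest total cost on the history."""
    return min(
        candidates,
        key=lambda th: total_cost(y_true, scores, th,
                                  false_alarm_cost, missed_failure_cost),
    )


# Hypothetical history and costs agreed with the maintenance team.
y_true = [0, 0, 0, 0, 1, 1, 0, 1, 0, 1]
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.80, 0.15, 0.90]
candidates = [0.3, 0.5, 0.7]

chosen = best_threshold(y_true, scores, candidates,
                        false_alarm_cost=2_000, missed_failure_cost=50_000)
print(chosen)
```

Because a missed failure is assumed here to cost 25 times more than a false alarm, the lowest threshold wins even though it raises two false alarms. Different cost ratios would shift the choice, which is the conversation worth having with the people who respond to the alerts.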

References

Straits Research. Predictive Maintenance Market Size & Outlook 2024–2032. 2024.

Predictive maintenance in Industry 4.0: a survey of planning models. 2024.

Koppula M. Predictive maintenance to reduce machine downtime in factories using machine learning algorithms. IJARCS.

WorkTrek. 8 Trends Shaping the Future of Predictive Maintenance. 2026.

Predictive Maintenance in Industry 4.0: A Systematic Review. 2025.

Machine Learning for Predictive Maintenance Applications in Industrial Equipment.

Coherent Market Insights. Predictive Maintenance Market Size and Forecast 2025–2032. 2025.

Predictive Maintenance Approaches: A Systematic Literature Review. JIEM.

AI‑Based Predictive Modelling for Internal Quality Control. 2025.

Machine Learning Algorithms for Predictive Maintenance in Manufacturing. 2024.
